Meta's Above & Beyond Computer Science Program

Siva

Arrays & Strings

Why Arrays?

Pros

- Reading and writing in

O(1)time. - Occupies contiguous memory and can be completely loaded into CPU cache which makes them quickly accessible.

- Can be used as a backing data structure for other data structures like stacks, queues, heaps. These are known as abstract data types (ADT).

Cons

- Not dynamic, i.e. cannot add or remove elements. It is not designed to grow or shrink. Mostly for complied languages.

- Adding to full size array of

nelements, we need to create another new array of size2nand copy the items. - Creating a large array to solve the problem above, a lot of space is wasted.

Sorting Arrays

Two families of sorting algorithms:

O(n^2)O(nlog(n))

Properties of sorting algorithms:

- Stable: An algorithm that maintains the relative order of equal elements.

- Online: An algorithm that can be applied to a stream of data.

- In-Place: An algorithm that works on the same array as the input.

| Algorithm | Stable | Online | In-Place | Time Complexity | Space Complexity |

|---|---|---|---|---|---|

| Bubble Sort | Yes | No | Yes | O(n^2) |

O(1) |

| Selection Sort | No | No | Yes | O(n^2) |

O(1) |

| Insertion Sort | Yes | Yes | Yes | O(n^2) |

O(1) |

| Merge Sort | Yes | No | Yes | O(nlog(n)) |

O(n) |

| Quick Sort | No | No | No | O(nlog(n)) |

O(log(n)) |

| Heap Sort | no | No | No | O(nlog(n)) |

O(1) |

| Counting Sort | No | No | No | O(n+k) where k is the range of numbers |

O(1) |

Strings

Strings are special type of arrays, namely made out of characters.

Fascinating properties of strings in JavaScript:

- In JavaScript, strings are represented using a binary tree, specifically the rope data structure.

- Certain operations such as string concatenation are highly optimized,

O(1)amortized,O(log(n))without re-balancing instead ofO(n).

Recursion

Nothing much, basically get your base case and then your inductive step right. Recursive solution can almost always be implemented iteratively. But in many cases, it is harder and more error prone; typically, the added complexity outweighs the benefits.

Example:

def reverse(head: Optional[ListNode]) -> Optional[ListNode]:

if head is None or head.next is None:

return head

reversed_list = self.reverse(head.next)

head.next.next = head

head.next = None

return reversed_listTrees

For trees the most important things are some of the mathematical properties.

- Height:

h - Max Nodes of level l:

2^(l-1) - Max nodes of height h:

2^h - 1 - With N nodes, min height:

log(N+1)(base 2) - With k leaves, min height:

log(k) + 1(base 2) - Number of leaf nodes is always one more than nodes with two children

Linked List

Linked list are dynamic data structures that are made out of nodes.

| SINGLY LINKED LIST OPERATION | Time Complexity |

|---|---|

| Access i-th element | O(n) |

| Traverse all elements | O(n) |

| Insert element E at current point | O(1) |

| Delete current element | O(1) |

| Insert element E at front | O(1) |

| Insert element E at end | O(n) |

Stacks & Queues

Stacks follow LIFO (last in, first out) principle. i.e. the last element added is the first element to be removed.

In python, the list object is a stack by default.

Queues follow FIFO (first in, first out) principle. i.e. the first element added is the first element to be removed.

In python, the deque object is a double ended queue that can be used as a queue.

from collections import deque

# This function is used to insert the value in its argument to the right end of the deque.

append(item)

# This function is used to insert the value in its argument to the left end of the deque.

appendleft(item)

# This function is used to delete an argument from the right end of the deque.

pop()

# This function is used to delete an argument from the left end of the deque.

popleft()Heaps

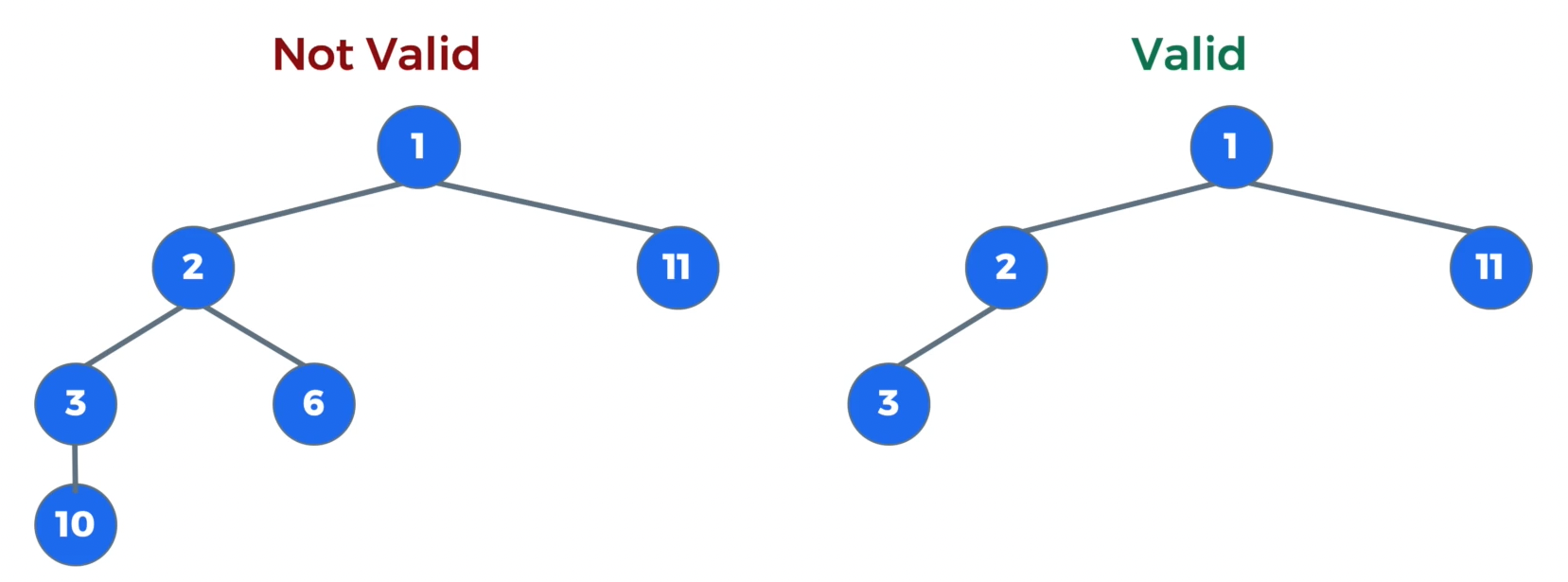

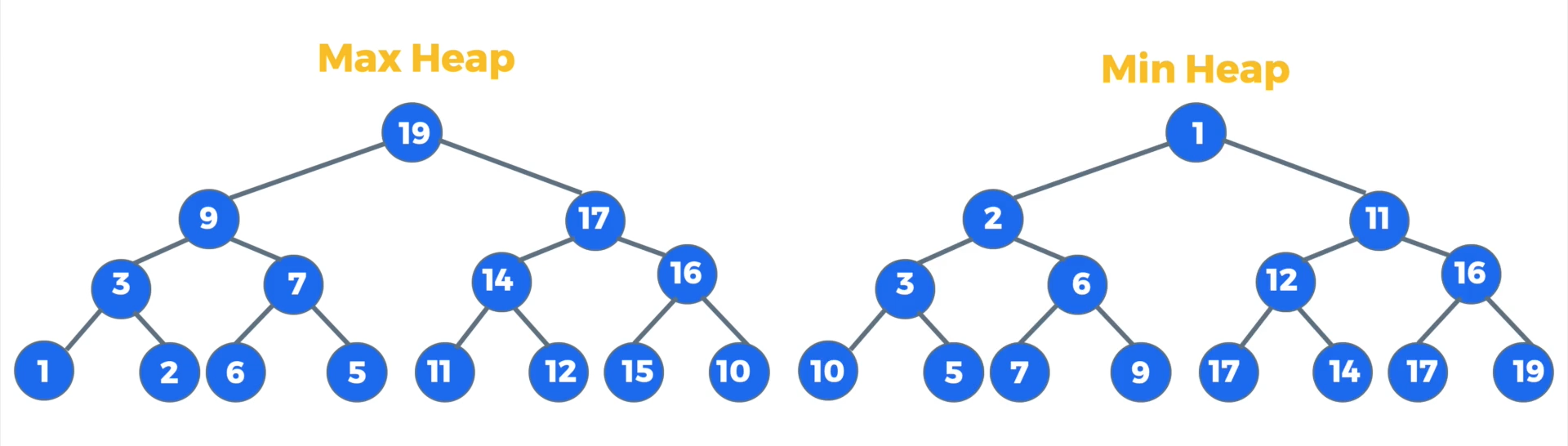

A heap is a binary tree with some additional properties.

- A heap is a complete binary tree. i.e. all levels are completely filled except the last one.

- Min-Heap: The key of each parent node is less than or equal to the key of its children.

- Max-Heap: The key of each parent node is greater than or equal to the key of its children.

Where are heaps used?

- Heapsort: In-place O(nlogn) time sorting algorithm.

- Selection algorithms: Algorithms that find the kth smallest or largest element in a list.

- Graph algorithms: Complex algorithms reduced to polynomial order runtime algorithms using heaps.

- Priority queues: A priority queue is a data structure that can be used to store and access elements in a sorted order.

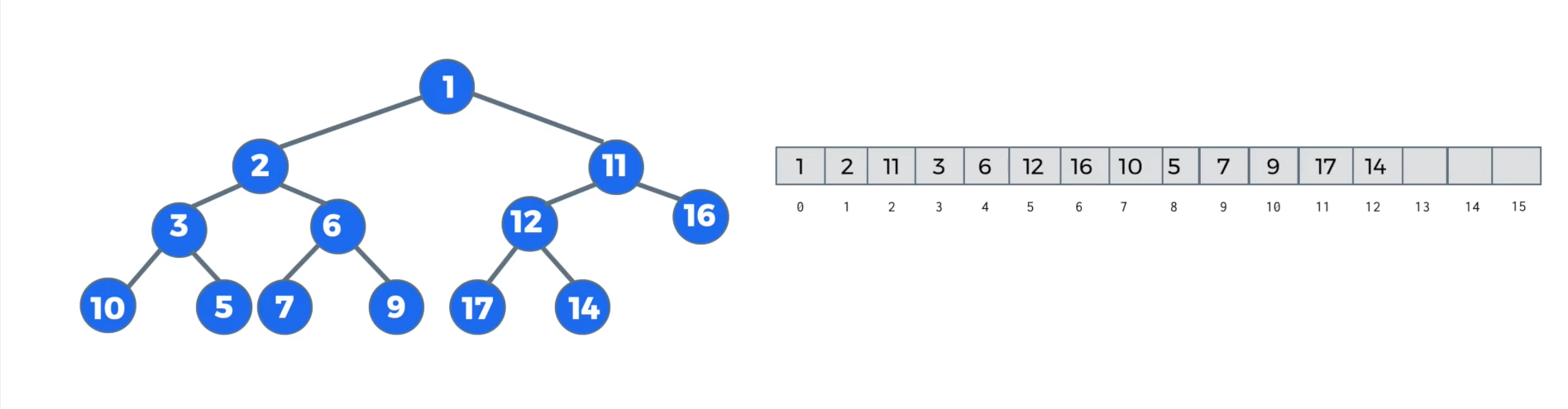

Implementing a heap: A heap is usually implemented as an array.

For an element at index i:

- Its left child is located at 2i + 1

- Its right child is located at 2i + 2

- Its parent is located at (i - 1) // 2

Implementation of a heap:

class Heap():

def __init__(self, heap = []):

self.heap = heap

self.__build_heap()

def add(self, el: int) -> None:

self.heap.append(el)

i = len(self.heap) - 1

# while el is less than its parent, swap them

while i > 0 and self.heap[self.__parent(i)] > el:

self.__swap(i, self.__parent(i))

i = self.__parent(i)

def delete(self, el: int) -> None:

if not self.heap:

return

i = self.heap.index(el)

# if the element is the last element, remove it and return

if i == len(self.heap) - 1:

self.heap.pop()

return

self.heap[i] = self.heap.pop()

while i < len(self.heap):

child = self.__left_child(i)

right_child = self.__right_child(i)

# if the right child is smaller than the left child, use the right child

if right_child < len(self.heap) and self.heap[right_child] < self.heap[child]:

child += 1

# if the child is >= the item bubbling down, break

if child >= len(self.heap) or self.heap[i] <= self.heap[child]:

break

# otherwise, swap the child and the item

self.__swap(i, child)

i = child

def getMin(self) -> int:

return self.heap[0]

def __build_heap(self) -> None:

# start at the last parent node and heapify, leaf nodes are already min-heaps

for i in range(len(self.heap) // 2 - 1, -1, -1):

self.__min_heapify(i)

def __min_heapify(self, i) -> None:

smallest = i

l = self.__left_child(i)

r = self.__right_child(i)

# if left child is smaller than the element at current index

if l < len(self.heap) and self.heap[l] < self.heap[smallest]:

smallest = l

# if right child is smaller than the element at current index

if r < len(self.heap) and self.heap[r] < self.heap[smallest]:

smallest = r

# if the smallest is not the current index, swap them and heapify the new affected subtree (for bubbling up/down)

if smallest != i:

self.__swap(i, smallest)

self.__min_heapify(smallest)

def __parent(self, i):

return (i - 1) // 2

def __left_child(self, i):

return 2 * i + 1

def __right_child(self, i):

return self.__left_child(i) + 1

def __swap(self, i, j):

self.heap[i], self.heap[j] = self.heap[j], self.heap[i]Using heaps:

- Heaps are used to implement priority queues. Getting the smallest and largest element can be done with python's

heapqmodule. Note thatheapqis a min-heap by default, negate the value to get the max-heap.

One interesting problem is to find the median of a stream of numbers.

class MedianFinder:

def __init__(self):

self.max_heap = [] # to store smaller half of the numbers

self.min_heap = [] # to store larger half of the numbers

def addNum(self, num: int) -> None:

# add the negative of the number to the max heap

heapq.heappush(self.max_heap, -num)

# offer the largest number of max_heap to min_heap

heapq.heappush(self.min_heap, -heapq.heappop(self.max_heap))

# if the heaps are of different sizes, make sure that the max_heap is always of greater size than the min_heap

if len(self.min_heap) > len(self.max_heap):

heapq.heappush(self.max_heap, -heapq.heappop(self.min_heap))

def findMedian(self) -> float:

# if the heaps are not of equal size, return the max_heap's top element, it will be median

if len(self.max_heap) > len(self.min_heap):

return -self.max_heap[0]

# otherwise, return the average of the top elements of the heaps

return (self.min_heap[0] - self.max_heap[0]) / 2

# Your MedianFinder object will be instantiated and called as such:

# obj = MedianFinder()

# obj.addNum(num)

# param_2 = obj.findMedian()Hash Tables

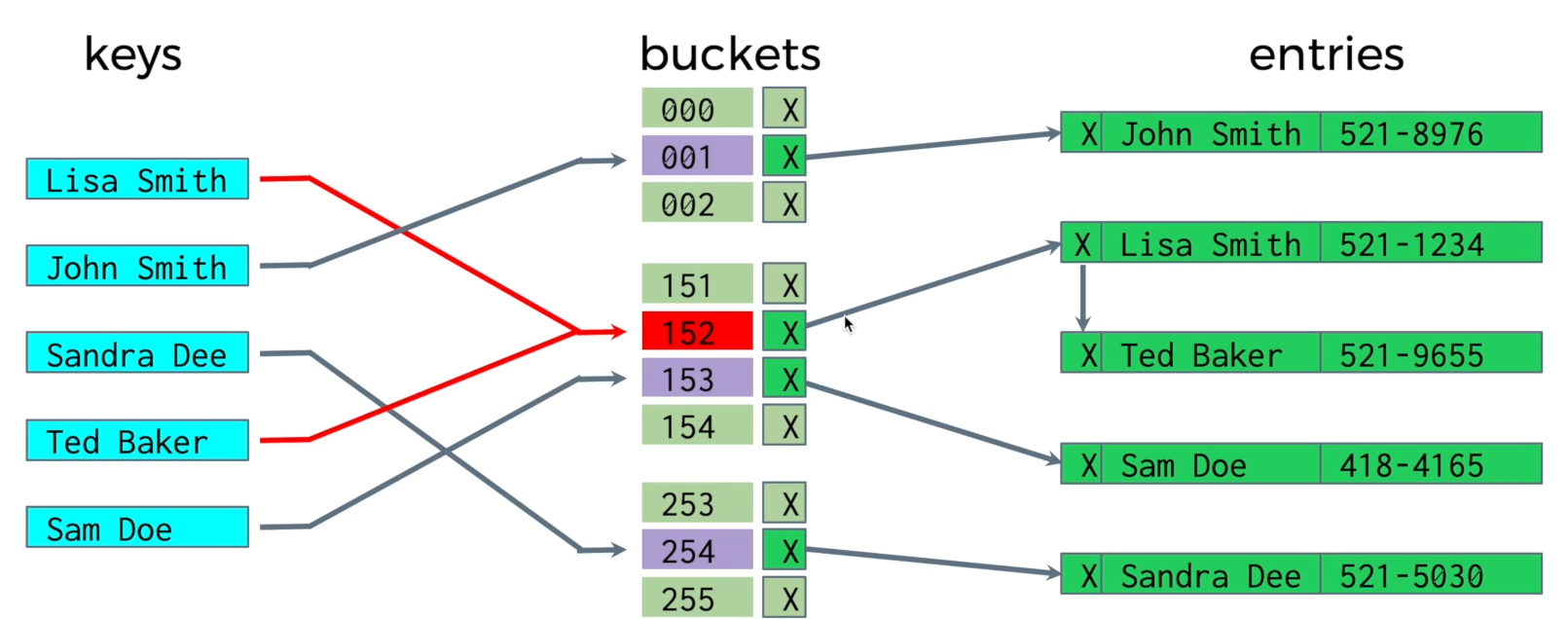

Hash tables are a data structure that can be used to store key-value pairs. A hash function is used to map keys to positions in an array.

Hash function should indeally compute in O(1) time. On average, lookups, insertions and deletion will be O(1 + n/m) time where n/m is the load factor.

- If the load factor grows large, the hash table will have to be resized. This is O(n + m) runtime.

- However, we assume that rehasing is rarely an issue outside of real time systems and we almost always assume that the rehasing occurs rarely.

Efficiency of hash tables depends on the hash function.

Hash tables can be used to implement:

- Sets (basically a hash table with no values and only keys)

- Tries

Graphs

A graph is a data structure that can be used to represent a set of nodes and edges between them. Graphs can be represented using adjacency lists, adjacency matrices, and adjacency vectors.

Different types of graphs are:

- Simple Graph: All edges are bidirectional.

- Directed Graph: Same as simple graph but might have some uni-directional edges.

- Cyclic Graph: A graph that has a cycle.

- Acyclic Graph: A graph that has no cycles. Trees are acyclic graphs by nature.

- Directed Acyclic Graph: A directed graph that has no cycles.